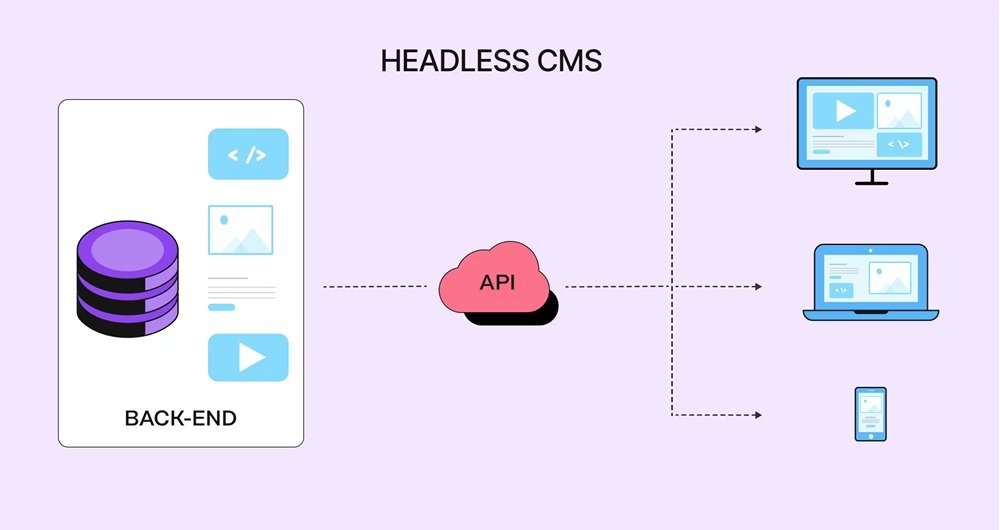

With the rise of headless CMS deployments, the security of API endpoints is paramount. In a headless architecture, APIs are the primary means of content retrieval and delivery across websites, applications and digital touchpoints. Because these endpoints are publicly exposed, they need to be secured to protect content integrity and user data without sacrificing the flexibility of the architecture. APIs are an increasingly attractive target for bad actors looking for quick access to sensitive information and important systems, so adequate security is crucial for trust, compliance and day-to-day operations. This article addresses how to secure API endpoints in a headless CMS deployment and how organizations can protect themselves now and in the future.

Security Implications of Headless API-First Integrations

In a traditional monolithic CMS architecture, content is typically delivered through server-rendered pages, so fewer entry points are exposed to attackers. In a headless CMS, however, the API is one of the most important parts of content delivery, and security missteps could allow attackers to exploit the system, steal data, or trigger denial-of-service attacks. Storyblok’s flexible content management fits into this landscape by emphasizing structured access, clear permission models, and predictable API behavior that supports secure implementations. Specific API security risks include brute-force attacks, token theft, excessive permissions, misconfigured settings, rate-limit evasion, and injection attacks. APIs are designed for remote, programmatic access, which means unprotected endpoints are easy targets for automated bots. Vulnerabilities should be identified from the start so that security is built into the logic of the headless ecosystem rather than added as an after-the-fact adjustment. The earlier vulnerabilities are acknowledged, the earlier they can be remediated.

Robust Authentication Requirements for API Access

Authentication is the first step to securing API endpoints and establishing a firm barrier to entry. Strong authentication measures, such as OAuth 2.0 flows, API keys, JSON Web Tokens (JWT) and mutually authenticated TLS, ensure that only appropriate systems and users are allowed into the CMS. Token-based authentication provides temporary, cryptographically protected credentials that are not persistent: once a token expires, anyone who tries to reuse it receives an error. Tokens should also be rotated frequently and given short expirations, and the accounts that issue them should be protected with multifactor authentication. For public-facing APIs, client authentication layers should be used to prevent data scraping and mass requests from third parties without proper permissions. Authentication is like providing a key to the front door; if it is strong enough, those without the key never make it inside.
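To make the idea of expiring, tamper-evident tokens concrete, here is a minimal sketch using only the Python standard library. It is not a JWT implementation and not how any particular CMS issues tokens; the key, the token layout and the function names `issue_token` and `verify_token` are all assumptions made for illustration.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical signing key; a real deployment would load it from a secrets manager.
SECRET_KEY = b"example-signing-key"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def _sign(payload: bytes) -> str:
    return _b64(hmac.new(SECRET_KEY, payload, hashlib.sha256).digest())


def issue_token(client_id: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Return a token of the form <payload>.<signature> that expires after ttl_seconds."""
    issued_at = time.time() if now is None else now
    payload = json.dumps({"sub": client_id, "exp": issued_at + ttl_seconds}).encode()
    return f"{_b64(payload)}.{_sign(payload)}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the client id if the token is authentic and unexpired, otherwise None."""
    current = time.time() if now is None else now
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
    except ValueError:
        return None  # malformed token
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(signature_b64.encode(), _sign(payload).encode()):
        return None
    claims = json.loads(payload)
    if current >= claims["exp"]:
        return None  # expired
    return claims["sub"]
```

In production, a maintained OAuth 2.0 or JWT library is usually the better choice; the sketch only shows why an expired or altered token is rejected.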

Role-Based Permissions Manage What Users/Clients Can Do

If someone gets in the front door, it’s essential to know how much of the house they can roam. While authentication is the first line of protection, permissions dictate what a user or system can do within the API. Role-based access control (RBAC) allows organizations to assign permissions by user group or API consumer so that each is granted only what it requires. For example, a developer might have read-write access within a staging environment but limited access in production. An external integration might be restricted to the specific collections or endpoints it needs for its function. This limits damage if credentials are compromised and supports compliance with governance policies. For large teams and complex deployments, fine-grained permissions are necessary to keep everyone productive while the headless setup stays under control.
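The staging-versus-production example above can be sketched as a deny-by-default permission table. The role names, environments and the `is_allowed` helper are hypothetical and only illustrate the shape of an RBAC check.

```python
# Hypothetical roles mapped to the (environment, action) pairs they are granted.
ROLE_PERMISSIONS = {
    "developer": {
        ("staging", "read"),
        ("staging", "write"),
        ("production", "read"),
    },
    "external_integration": {
        ("production", "read"),
    },
}


def is_allowed(role: str, environment: str, action: str) -> bool:
    """Deny by default: only explicitly granted pairs pass, unknown roles get nothing."""
    return (environment, action) in ROLE_PERMISSIONS.get(role, set())
```

Because anything not listed is refused, a compromised integration credential in this sketch can read production content but cannot write anywhere.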

Ensuring Transport Security of API Calls

One of the best ways to safeguard data from eavesdropping is to encrypt everything that traverses the network. This means serving all APIs over HTTPS with TLS 1.2 at a minimum. Without an encrypted connection, bad actors can intercept traffic, hijack credentials, or modify responses via man-in-the-middle (MITM) attacks. Beyond transport encryption, organizations might want to use response signing, payload validation or even certificate pinning so that recipients can verify that responses come from a trusted sender and have not been manipulated in transit. For sensitive payloads or business-critical data, mutually authenticated TLS is a best practice. Encryption and transport security keep API calls private and make any intercepted traffic unreadable.
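On the client side, the TLS 1.2 minimum can be enforced explicitly. The following sketch uses Python's standard `ssl` module; the function name `build_client_context` is an assumption, and how the context is passed to an HTTP client depends on the library in use.

```python
import ssl


def build_client_context() -> ssl.SSLContext:
    """Create a TLS context that verifies certificates and refuses anything below TLS 1.2."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

For mutually authenticated TLS, the same context can additionally present a client certificate via `context.load_cert_chain(...)`.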

Leveraging Rate Limits to Prevent Abuse

Rate limiting an API is one of the best ways to avoid abuse. Without it, misbehaving clients or malicious actors can overload endpoints with too many requests or data submissions. This can cripple an API through performance failures or forced downtime. Setting appropriate request limits per user, user agent, or system keeps APIs available during spikes in activity. Rate limits also provide insight into per-user or per-system usage, highlighting odd behavior that might signal an attempted compromise. When paired with throttling, request queuing or automatic blocking of repeat offenders, rate limits are a first line of defense against denial-of-service attacks. For headless CMS implementations in scaled environments, rate limits are as much about performance as they are about safety.
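One common way to implement request limits is a token bucket, sketched below. This is a generic technique, not the mechanism of any specific CMS or gateway; the class name and parameters are illustrative.

```python
import time
from typing import Optional


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at `refill_per_second`."""

    def __init__(self, capacity: int, refill_per_second: float, now: Optional[float] = None):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.updated_at = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        """Return True and consume one token if the request may proceed."""
        current = time.monotonic() if now is None else now
        elapsed = current - self.updated_at
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated_at = current
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A per-client limiter would keep one bucket per API key or IP address, for example in a dictionary, and respond with HTTP 429 whenever `allow()` returns `False`.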

Validating Input for Trusted Transmission

Input validation means ensuring that all data received in API requests is sanitized, appropriately formatted, and non-malicious. Injection attacks such as SQL injection and script injection, along with malformed payloads and unexpected fields, can compromise even well-designed APIs if input is not vetted. Important aspects of validation include enforcing a mandatory schema, rejecting requests that contain unrecognized fields, checking data types and unexpected characters, and sanitizing every string input. Validation must be enforced on the server side; client-side filtering is a useful complement but can be bypassed, so it should never be the only check. Schema validation libraries, firewalls and API gateways can each enforce these rules, providing defense in depth. Strong input validation significantly reduces the attack surface and prevents many common API vulnerabilities.
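The checks listed above (required schema, rejection of unknown fields, type checks and character restrictions) can be sketched in a few lines of server-side code. The `ARTICLE_SCHEMA` fields and the slug pattern are hypothetical; a real project would more likely rely on a schema validation library.

```python
import re

# Hypothetical schema: field name -> required Python type.
ARTICLE_SCHEMA = {"title": str, "slug": str, "word_count": int}
# Slugs may contain only lowercase letters, digits and hyphens.
SLUG_PATTERN = re.compile(r"[a-z0-9-]+")


def validate_article(payload: dict) -> list:
    """Return a list of validation errors; an empty list means the payload is accepted."""
    errors = []
    for field in payload:
        if field not in ARTICLE_SCHEMA:
            errors.append(f"unexpected field: {field}")
    for field, expected_type in ARTICLE_SCHEMA.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        # Exact type match so that booleans are not accepted as integers.
        elif type(payload[field]) is not expected_type:
            errors.append(f"wrong type for field: {field}")
    slug = payload.get("slug")
    if isinstance(slug, str) and not SLUG_PATTERN.fullmatch(slug):
        errors.append("invalid characters in slug")
    return errors
```

Rejecting the whole request when the list is non-empty, rather than silently dropping bad fields, keeps unexpected data from ever reaching the content store.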

Use Your API Gateway for Security & Monitoring

An API gateway sits in front of your headless endpoints and shields them from bad requests. The gateway handles authentication, authorization, throttling, logging and request filtering, so any request that reaches the CMS has already been vetted and approved. An API gateway also provides centralized monitoring so that security teams can see where and when to troubleshoot and which findings need further investigation. Some API gateways also offer anomaly detection, geo-blocking, IP allowlisting and other automated threat responses. The more requests an enterprise receives across its headless spaces, the more it makes sense to centralize access management through an API gateway. For enterprise-sized headless installations, an API gateway is an obvious way to keep everything consistent and secure across spaces.

Monitor The API in Use for Security Detection & Performance Insights

Monitoring gives security teams the operational visibility they need to respond to potential threats. When the API is in use on the front end, thousands of requests per day can be monitored and compared against expected usage. For example, if an unusual number of requests come from a single region, or too many failed authentications come from a single IP address, the security team needs to act quickly, before a breach can happen. Furthermore, by correlating performance metrics with security detections, teams can find slow endpoints that would benefit from smaller payloads or caching. Monitoring should go hand in hand with alerting so that concerns are raised as they happen. The better companies understand how their content is accessed across channels, the better positioned they are for both security and performance.
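The failed-authentication example can be illustrated with a small log-analysis sketch. The event format (dictionaries with `ip` and `status` keys) and the threshold are assumptions; real access logs would need parsing into this shape first.

```python
from collections import Counter


def flag_suspicious_ips(events: list, max_failures: int) -> list:
    """Return the IPs with more than `max_failures` HTTP 401 responses, sorted."""
    failures = Counter(event["ip"] for event in events if event["status"] == 401)
    return sorted(ip for ip, count in failures.items() if count > max_failures)
```

Feeding the result into an alerting channel, rather than only a dashboard, is what turns this kind of check into a timely response.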

Keep Secrets in Check

API keys, tokens and other credentials should never be exposed, because leaked secrets lead directly to breaches, and most leaks come down to human error. For example, hardcoding secrets into shared code repositories or leaving them in front-end code that ships to the browser should be avoided. Secrets should be stored in vault services or protected environment configuration and rotated on a regular schedule. Automating storage and rotation reduces the chance of human mistakes. In distributed systems, properly secured cross-system access keeps CMS endpoints and spaces intact.
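As a minimal alternative to hardcoding, a service can read its credentials from the environment at startup and fail fast when one is missing. The helper name `load_secret` and the variable name `CMS_API_TOKEN` used in the note below are hypothetical; a vault client would replace the environment lookup in a larger setup.

```python
import os


def load_secret(name: str) -> str:
    """Read a secret from the environment and fail fast if it is missing or empty."""
    value = os.environ.get(name)
    if not value:
        # The error names the variable but never echoes a secret value.
        raise RuntimeError(f"required secret {name} is not set")
    return value
```

A call such as `load_secret("CMS_API_TOKEN")` keeps the token out of the repository, and rotating it becomes a deployment configuration change rather than a code change.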

Why API Endpoint Security Is Critical for a Headless Implementation

When a headless CMS implementation is part of your organization’s digital future, API security is crucial to its success. Because content from a headless CMS is served to many destinations (subdomains, partner applications, omnichannel solutions, and even kiosks), attackers try to exploit unsecured APIs at scale using common vulnerabilities. They can inject malicious content, compromise personal information, or ruin the user experience. By securing API endpoints throughout deployment and maintenance, organizations keep content delivery safe and uninterrupted. Doing so also supports regulatory compliance, which builds trust with users, and keeps platforms stable under heavy usage. With endpoint access limited to clients holding valid credentials and protected by multiple layers such as authentication, encryption, access control lists (ACLs) and logging, organizations create a solid foundation for future needs. In an age of dependence on third-party delivery channels, the endpoints that make this possible must be secured.

IP Allowlisting and Network Restrictions

IP allowlisting and network restrictions are two robust layers of defense for headless CMS endpoints. Instead of exposing APIs to everyone on the internet, access can be limited to specific IP ranges, such as those of connected organizations, CDNs, or serverless functions running at the edge. This prevents unauthorized traffic and automated attacks by limiting where requests can come from. Similarly, virtual private networks, private links, or specific firewall rules can restrict who can reach private endpoints. The goal is to shrink the attack surface with IP and network controls that keep hostile actors from getting anywhere near the API layer in the first place.
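An allowlist check is straightforward with Python's standard `ipaddress` module. The networks below are reserved documentation ranges used as placeholders; real values would be the published ranges of your own CDN, partners or edge functions.

```python
import ipaddress

# Placeholder networks (reserved documentation ranges), not real infrastructure.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_ip_allowed(remote_addr: str) -> bool:
    """Return True only if the address parses and falls inside an allowed network."""
    try:
        address = ipaddress.ip_address(remote_addr)
    except ValueError:
        return False  # unparseable input is rejected, not trusted
    return any(address in network for network in ALLOWED_NETWORKS)
```

When requests pass through a proxy or CDN, the address to check is the one the trusted proxy reports, not a client-supplied header taken at face value.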

Web Application Firewalls and Bot Protection

Web application firewalls (WAFs) protect against many common attacks at the entry point. Whether the threat is an injection attack, a cross-site scripting request, a malformed payload or bot-driven credential stuffing aimed at exposed admin functionality, a WAF acts as an effective intermediary for headless CMS APIs. Similarly, bot protection that uses behavioral analysis, rate shaping and device fingerprinting blocks automated scraping and DDoS attempts before they can do real damage. By filtering out noise and letting only legitimate requests reach the API, organizations reduce risk for any headless implementation that sees significant traffic over time.

Protecting Media and Asset Endpoints with Signed URLs and Expiration Policies

Another area of API security involves asset, or media, endpoints. Images, video files, and downloadable files can be repeatedly accessed, scraped or hotlinked, which makes them a target. Signed URLs ensure that only users who have been granted access can retrieve media, and only within a limited time window. Expiration policies limit how long a shared link remains useful, so a leaked or mistakenly sent URL does less harm. Organizations can further limit access to media with IP restrictions, watermarking, or even tiered access for premium assets. Protecting media access the same way as data APIs helps teams protect intellectual property, limit bandwidth overages and keep tighter control over how content is used.
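A signed URL typically carries an expiry timestamp plus a signature over the path and that timestamp. The sketch below shows the idea with an HMAC; the query parameter names, the key and the functions `sign_url` and `is_url_valid` are assumptions, and asset hosts and CDNs each define their own signing format.

```python
import hashlib
import hmac
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical signing key shared by the URL issuer and the asset server.
SIGNING_KEY = b"example-asset-signing-key"


def _signature(path: str, expires_at: int) -> str:
    message = f"{path}:{expires_at}".encode()
    return hmac.new(SIGNING_KEY, message, hashlib.sha256).hexdigest()


def sign_url(path: str, expires_at: int) -> str:
    """Append an expiry timestamp and an HMAC signature to an asset path."""
    query = urlencode({"expires": expires_at, "signature": _signature(path, expires_at)})
    return f"{path}?{query}"


def is_url_valid(url: str, now: int) -> bool:
    """Accept the URL only if the signature matches and the expiry has not passed."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires_at = int(params["expires"][0])
        signature = params["signature"][0]
    except (KeyError, ValueError):
        return False
    expected = _signature(parsed.path, expires_at)
    # Changing the path or the expiry invalidates the signature.
    return hmac.compare_digest(signature.encode(), expected.encode()) and now < expires_at
```

Because the expiry is part of the signed message, a recipient cannot extend a link's lifetime by editing the `expires` parameter.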

Incident Recovery and Response Plans Should Be Established Beforehand

No matter how many protections are in place, security incidents happen. Because no system is foolproof, an incident recovery and response plan ensures that organizations know how to act when something goes wrong. Tokens may need to be revoked and rotated, compromised endpoints restricted, earlier API access configurations restored, and malicious code analyzed forensically by security teams. The plan should clearly delineate responsibilities across development, security, legal and communications teams to avoid confusion in the middle of a high-stakes situation, especially in a headless CMS deployment where one API powers multiple front-end experiences at once. Preparation includes running mock incidents, setting up automated alerts and maintaining detailed documentation.